Linux phones are not automatically secure

A common point in the Linux community is that escaping the walled garden of ecosystems like Android or iOS is already a means to higher security. Never contacting Google or Apple servers again, and never having cloud providers snoop on your private files or contacts, is a good selling point for the privacy-conscious.

The ability to freely sideload applications, switch and update kernels, plus a deeper knowledge of what data each app sends to remote servers, means that total control of our personal devices finally feels achievable. And, of course, the mainline Linux kernel is generally safer than the half-baked, often outdated manufacturer kernels ("downstream") that most mobile devices rely on, at least when stable patches are no longer provided.

There is no catch here: the above are perfectly sound points. But while Linux phones can potentially be more secure devices under the full control of their owners, it is important to realize that most Linux systems are not secure by default, as most distributions prioritize freedom over strict internal isolation. Don't panic, though: we'll go through this.

I would also like to deeply thank Luca Di Maio, infosec professional, author of DistroBox and contributor to VanillaOS, for helping me write and proofread this post. ❤️

Modems trust their home

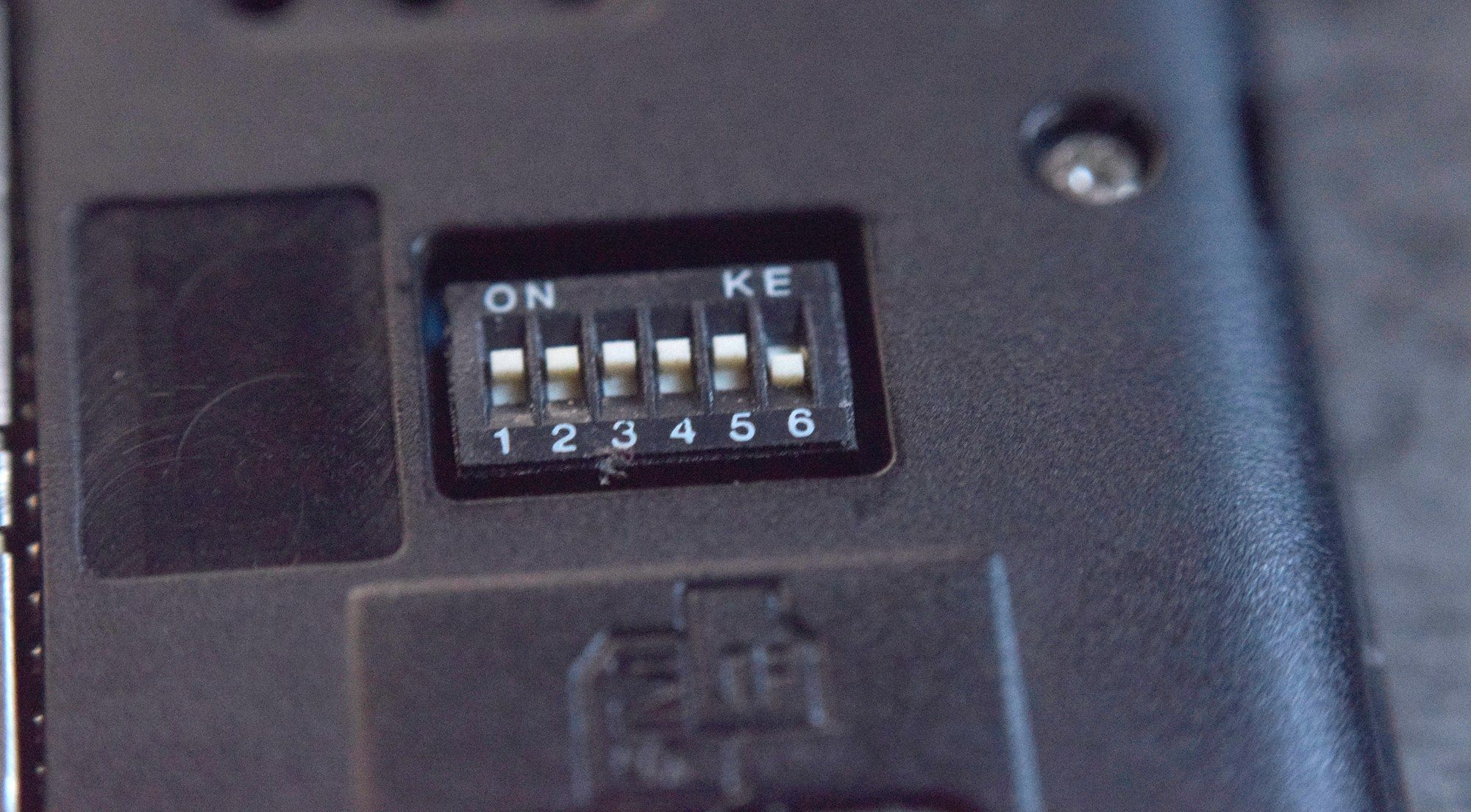

Both Librems and PinePhones adopt a radical solution to prevent the unwanted flow of sensitive data: hardware kill switches. These are an effective, intuitive way to make sure you are sharing only the data you wish to at any point, and make wireless attacks or e.g. sneaky GNSS logging physically impossible. Though of course, with proper privilege separation, a software switch can be just as effective. But what happens once these switches are turned on?

The modem is hooked up via USB on both the Librem 5 (which lets you replace it directly) and on both PinePhones. Consumer phones take different approaches. Up until around 2019, Qualcomm mobile SoCs featured the modem as a built-in component, sharing the same memory address space and utilising standard MMU security features as well as custom XPU hardware to provide protection in both directions. With the advent of 5G, the X55 and X65 modems are instead hooked up over PCIe, with the modem running its own Linux kernel, presumably in order to hit the required speeds, and so that the same modem can be shipped on x86 laptops and other devices.

Whilst Qualcomm's approach of having the modem and CPU share the same address space does enable the potential for devastating exploits, the amount of protection (see that XPU hyperlink above) makes these integrated modems arguably more secure than what you get on a PinePhone or Librem 5 - depending on how much you trust Qualcomm.

When there isn't a "chain of trust" involving the modem, and when the modem includes its own storage (i.e. on the PinePhone and Librem 5), it is usually possible for this to be externally tampered with. This may involve e.g. reflashing by malicious agents with root permissions on the device, or through physical access.

There are some benefits to this. For example, the PinePhone lets you flash a custom, open-source firmware on its modem (which, being illegal in some countries, we report just for educational purposes). This allows for better trust in one of your phone's most critical mobile components, but at the same time, it means any bad guy with physical device access will be able to reflash it.

Some pedants might insist that the PinePhone modem "open firmware" isn't actually open source: the actual baseband component which implements the lowest level of communication runs on a Qualcomm DSP and is proprietary. Whilst this is an important distinction, it fundamentally misses the point of articles like the above, and only serves to diminish the fantastic work done by FOSS developers to continue pushing for user ownership and control of their devices. We hope that other reports will nonetheless continue to be clear about this fact, as Hackaday is thankfully in the above article.

Sandboxes and granular permissions

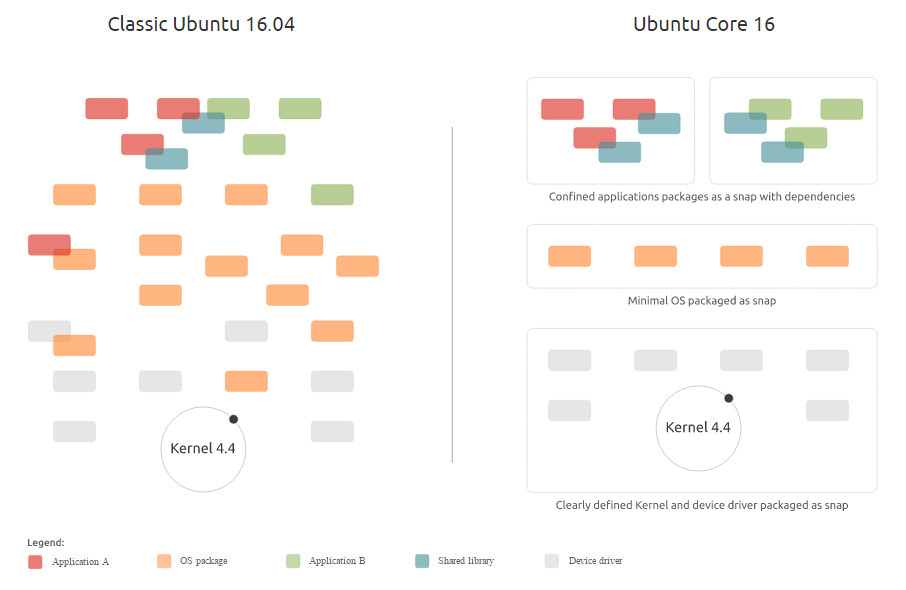

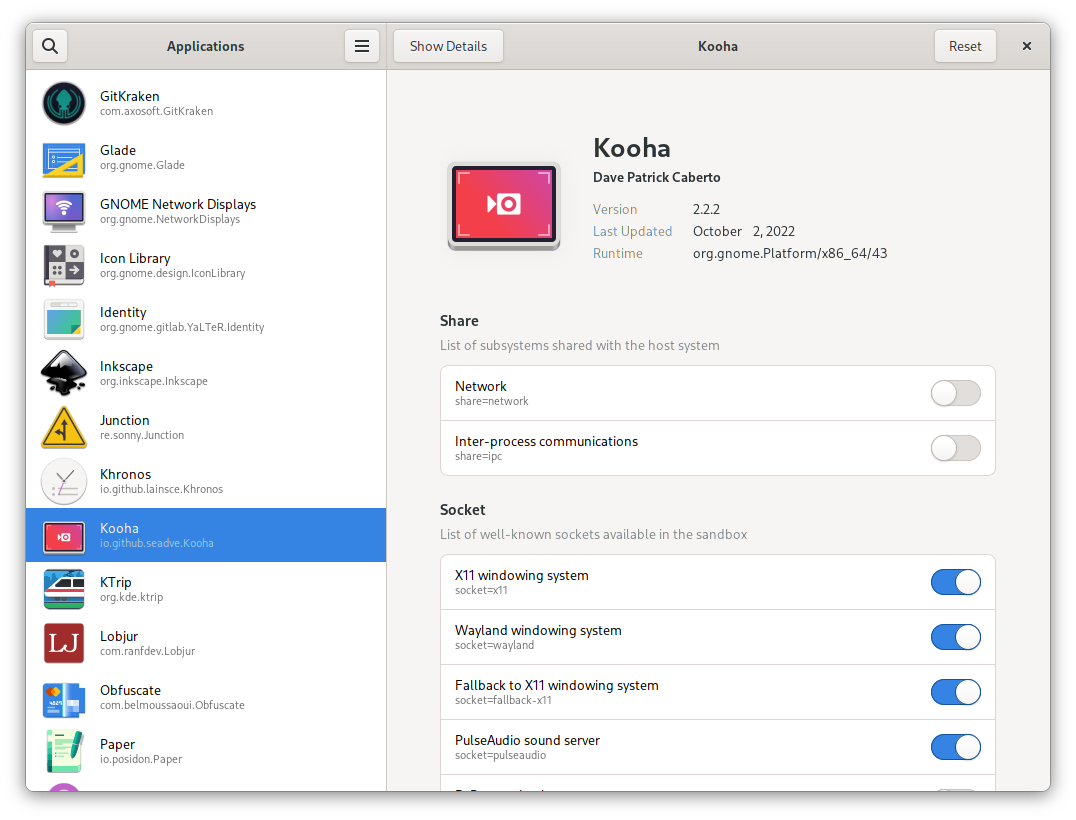

By default, user-space software loaded inside a Linux device follows the standard UNIX paradigm, and is trusted to access all parts of the system of the user who runs it. Granted, if you installed and executed something, that implies that you probably trust it in the first place. But one binary executed outside the sandbox is enough to compromise the entire userspace, and per-app sandboxing solutions such as Snap and Flatpak are only now starting to become popular.

The exceptions in this case are projects such as Fedora Silverblue, which use a Flatpak runtime to confine even core components and prevent unwanted access. Using Silverblue, however, still carries some compromises on actual usability - as does deep sandboxing in general.

Immutability, and read-only system partitions

On the same track, immutability in Linux systems has been under the spotlight in recent years. When I spoke to Luca (see above) at LAS 2022, he explained to me the fundamental benefits of immutability and why it should be implemented more widely in Linux systems. As Red Hat explains, immutability is quite intuitive to understand.

What does "immutable" mean? It means that it can’t be changed. To be more accurate, in a software context, it generally means that something can’t be changed during run time. [...] In Silverblue’s case, it’s the operating system that’s immutable. You install applications in containers (more on this later) using Flatpak, rather than onto the root filesystem. This means not only that the installation of applications is isolated from the core filesystem, but also that the ability for malicious applications to compromise your system is significantly reduced.

— Red Hat, 2019

This works essentially by "freezing" all non-sandboxed parts of the system. A better explanation can be found here:

The main two reasons for immutability in mobile systems are guaranteeing atomic updates, in order to maintain a stable system and allow "snapshots" and rollbacks, and the ability to sign the boot and system images, as dm-verity does on Android.

Basically, by signing the critical areas of the filesystem and verifying at boot, Android and iOS phones ensure that the system has not been modded, er, compromised, and if a serious root exploit disables the read-only mode on system components and overwrites them, the device will not be able to boot until it is reflashed. On the other hand, a "simple" read-only system partition is slightly less solid, because a root exploit could acquire write access and compromise the system permanently.
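To make the idea of "verifying at boot" a little more concrete, here is a minimal sketch of block-level integrity checking, loosely in the spirit of dm-verity. It is heavily simplified: the real thing uses a multi-level hash tree, a salt, and a root hash that is itself covered by a signature. The function names, the flat hash table and the 4096-byte block size are all assumptions made for this illustration.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size for this sketch


def build_hash_table(image: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Compute one SHA-256 digest per fixed-size block of the image."""
    return [
        hashlib.sha256(image[offset:offset + block_size]).digest()
        for offset in range(0, len(image), block_size)
    ]


def root_hash(table: list[bytes]) -> str:
    """Collapse the per-block digests into a single value to be trusted."""
    return hashlib.sha256(b"".join(table)).hexdigest()


def read_block(image: bytes, index: int, table: list[bytes],
               trusted_root: str, block_size: int = BLOCK_SIZE) -> bytes:
    """Return a block only if both the hash table and the block check out."""
    if root_hash(table) != trusted_root:
        raise ValueError("hash table does not match the trusted root hash")
    block = image[index * block_size:(index + 1) * block_size]
    if hashlib.sha256(block).digest() != table[index]:
        raise ValueError(f"block {index} was modified")
    return block
```

In this sketch only the root hash needs to live somewhere an attacker cannot write to. A root exploit that remounts the partition read-write and changes a single byte would make `read_block` fail for the affected block, which is roughly why a verified partition is sturdier than a merely read-only one.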

Aside from Android, read-only system partitions are also the default e.g. in Ubuntu Touch.

Immutability brings some undeniable limitations: with e.g. ostree, installing system-wide binaries requires "snapshots" of the partition and frequent reboots, and can become painful if these images need to be signed. So take the above example of immutability-plus-read-only-plus-signatures as the valhalla of immutability, and not something that the normal Linux mobile user should necessarily pursue, at least in its current state.

Busy sandboxes: Thousands of users

As we mentioned, non-sandboxed apps in a standard Linux user space can do anything their user is permitted to do. That is, access the entire filesystem for that user, read data from other processes, install and autoload executables, and so on.

This was also common practice in most desktop systems until recently, and in theory the user had to trust each binary individually to behave well and not snoop on them.

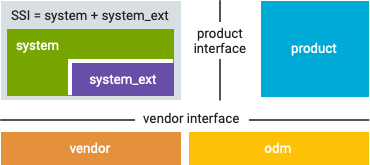

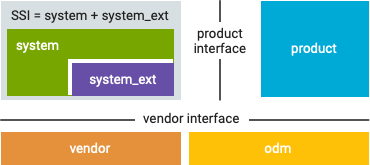

Following the practice of many modern containers, every Android app has a unique UNIX user. This sort of system-level isolation has always been popular in containers like Docker, Flatpak or podman, which are based on the same principle.

In a UNIX-style environment, filesystem permissions ensure that one user cannot alter or read another user's files. In the case of Android, each application runs as its own user. Unless the developer explicitly shares files with other applications, files created by one application cannot be read or altered by another application.

— Android Open Source Project
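The per-app user model described in the quote can be illustrated with the classic UNIX permission check. The sketch below is a simplification that ignores ACLs, capabilities and SELinux. The function name `can_read` and the example UIDs are made up for illustration, not taken from any real device.

```python
import stat


def can_read(file_uid: int, file_gid: int, mode: int,
             uid: int, gids: set[int]) -> bool:
    """Simplified UNIX read check: owner bits, then group bits, then others."""
    if uid == 0:  # root bypasses discretionary permission checks
        return True
    if uid == file_uid:
        return bool(mode & stat.S_IRUSR)
    if file_gid in gids:
        return bool(mode & stat.S_IRGRP)
    return bool(mode & stat.S_IROTH)


# Hypothetical UIDs: two Android-style apps, each running as its own user.
APP_A, APP_B = 10123, 10124

# A private file created by app A (mode 0600) is invisible to app B.
print(can_read(APP_A, APP_A, 0o600, uid=APP_B, gids={APP_B}))

# On a typical Linux phone, both apps run as the same user,
# so the very same file is readable by either of them.
DESKTOP_USER = 1000
print(can_read(DESKTOP_USER, DESKTOP_USER, 0o600,
               uid=DESKTOP_USER, gids={DESKTOP_USER}))
```

The permission bits are the same in both cases. What changes is only whether every app gets its own user, which is the whole trick.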

More on this topic can be found in Android's official documentation:

Tracking snoopy peripherals

This is not exclusively a Linux phone issue, because even most commercial mobile OSs fail to do permission tracking in a proper, "fair" manner.

For instance, Android asks user-installed apps for granular permissions, yet makes an exception for the Google Play Services suite, which is treated as almost system-level despite being fully proprietary. In fact, the Google Play Services runtime is allowed to do essentially any operation silently, such as installing or overwriting software, accessing software and hardware permissions, and so on.¹

Google Play services automatically obtains all permissions it needs to support its APIs. However, your app should still check and request runtime permissions as necessary and appropriately handle errors in cases where a user has denied Google Play services a permission required for an API your app uses.

— Google Developers docs

On the Linux side, much progress has been made on filesystem and peripheral permission tracking in the last 2-3 years. For instance, webcams and microphones can now be funnelled through PipeWire, and every application accessing this hardware will need to go through it for permissions. Furthermore, thanks to Wayland, your display's pixels are not in the "public domain" any more, and each application is allowed to see only its own rendered screen unless explicitly authorized.

Regarding Flatpak, the new-ish XDG Desktop Portals allow interfacing Flatpak applications with system-level hardware smoothly, allowing for fine-grained authorization, and possibly showing Android-like dialogs for specific authorizations. This is not yet a foolproof sandboxing system, as e.g. full user-space filesystem access is often granted to applications, but it comes very close.

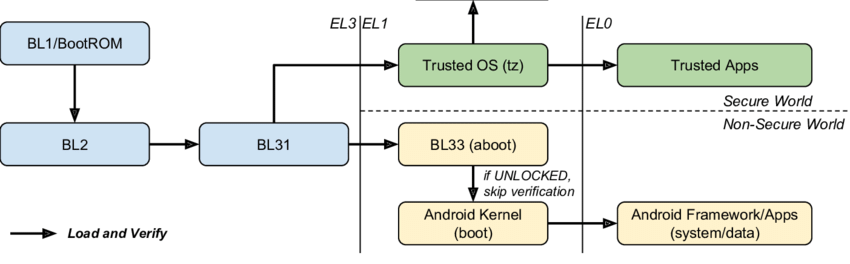

Open ≠ Unsigned Bootloaders

As some will know, we do advocate for free bootloaders – in fact, it's one of the main points that should be legally regulated in order to let users use their hardware as they wish. However, bootloader signatures should be a possibility also on free hardware, not as something imposed by the maker or device carrier, but rather as a feature to enable if needed.

By uploading their custom keys, users in security-critical situations could flash only an image they personally compiled and authorized to their Linux mobile device, and prevent overwriting by malicious agents.²

This is at the end of our list, however, as it is often easier said than done. While most mainstream phones could (and sometimes do) offer something like this without much effort, on custom-built Linux mobiles this requires a custom secure bootloader reimplementation with boot key sideloading, a chip-level trusted boot framework, and more critical stuff than it would make sense to have.

Most Linux phones currently encrypt the user space data partition to make it useless to attackers, but being – thankfully – fully unlocked, they do not prevent e.g. unsigned reflashing of the system partition while the device is turned off.

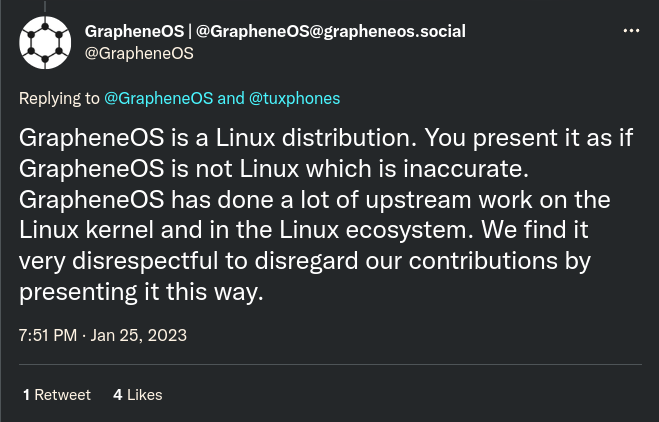

Google's lineup of Pixel phones is often touted for its support of custom AVB bootloader keys which, among other good practises, puts it in the elusive category of devices supported by the privacy-oriented GrapheneOS, which includes many extra software features to offer a much greater level of privacy and security. GrapheneOS were unhappy with a previous version of this paragraph describing them as an Android ROM, a common term used by the community to describe any modified Android/Linux (or, as I've taken to calling it, Android + Linux) system. We'll let this tweet speak for itself.

Basically, you upload your own keys to the Pixel device, and then have a custom trust chain, allowing you to use your own (or a third party's) signed images. Every element in the chain can verify the next one before loading it and yielding control, allowing every component in the system to be "proven" to be unmodified. (More on boot trust chains can be found here)
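As a rough illustration of such a chain, the sketch below lets each boot stage pin the digest of the stage that follows it, while the first stage is checked against a key enrolled by the user. Everything here is an assumption for the sake of the example: the `Stage` structure, the function names, and especially the use of HMAC with a shared key. Android Verified Boot relies on asymmetric signatures, and HMAC is used only because Python's standard library has no public-key signing.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Stage:
    name: str
    code: bytes
    next_digest: Optional[bytes]  # digest the following stage must match


def digest(stage: Stage) -> bytes:
    """Digest covers the stage's code and its pin on the next stage."""
    code_hash = hashlib.sha256(stage.code).digest()
    return hashlib.sha256(code_hash + (stage.next_digest or b"")).digest()


def build_chain(images: list[tuple[str, bytes]]) -> list[Stage]:
    """Build stages back to front so each one can pin its successor."""
    chain: list[Stage] = []
    next_digest = None
    for name, code in reversed(images):
        stage = Stage(name, code, next_digest)
        chain.insert(0, stage)
        next_digest = digest(stage)
    return chain


def sign_first_stage(chain: list[Stage], user_key: bytes) -> bytes:
    """Stand-in for signing the first stage with the user's own key."""
    return hmac.new(user_key, digest(chain[0]), hashlib.sha256).digest()


def boot(chain: list[Stage], user_key: bytes, signature: bytes) -> list[str]:
    """Verify the first stage with the key, then let each stage check the next."""
    expected = hmac.new(user_key, digest(chain[0]), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):
        raise RuntimeError("first stage is not signed with the enrolled key")
    loaded = [chain[0].name]
    for current, following in zip(chain, chain[1:]):
        if current.next_digest != digest(following):
            raise RuntimeError(f"{current.name} refuses to load {following.name}")
        loaded.append(following.name)
    return loaded
```

With a chain built from, say, a bootloader, a kernel and a system image, swapping the kernel for a modified one changes its digest, so the bootloader stage refuses to hand over control. Swapping the bootloader itself fails the very first check, because the attacker does not hold the enrolled key.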

Coming to the end, Linux phones might be indeed the most respectful option for their users when it comes to privacy, and user space sandboxing is making the Linux platform more trustworthy than it has ever been. But when "hard" security becomes a requirement, a Linux phone in its default configuration is far from foolproof, and taking additional precautions is the only way to harden one's device and guarantee a truly safe (and more mature) usage experience.

On the other hand, the default configuration of most distributions is tailored to provide users with freedom to play around with their mobile device without too much overhead or scary warnings, and adjust their features to their needs to a degree that has never been seen to this point. So keep your device safe from all the darkness out there, and happy hacking.

[1] See also Google Play Services - Package Index

[2] An example of how to sign LineageOS builds with AVB keys can be found here
