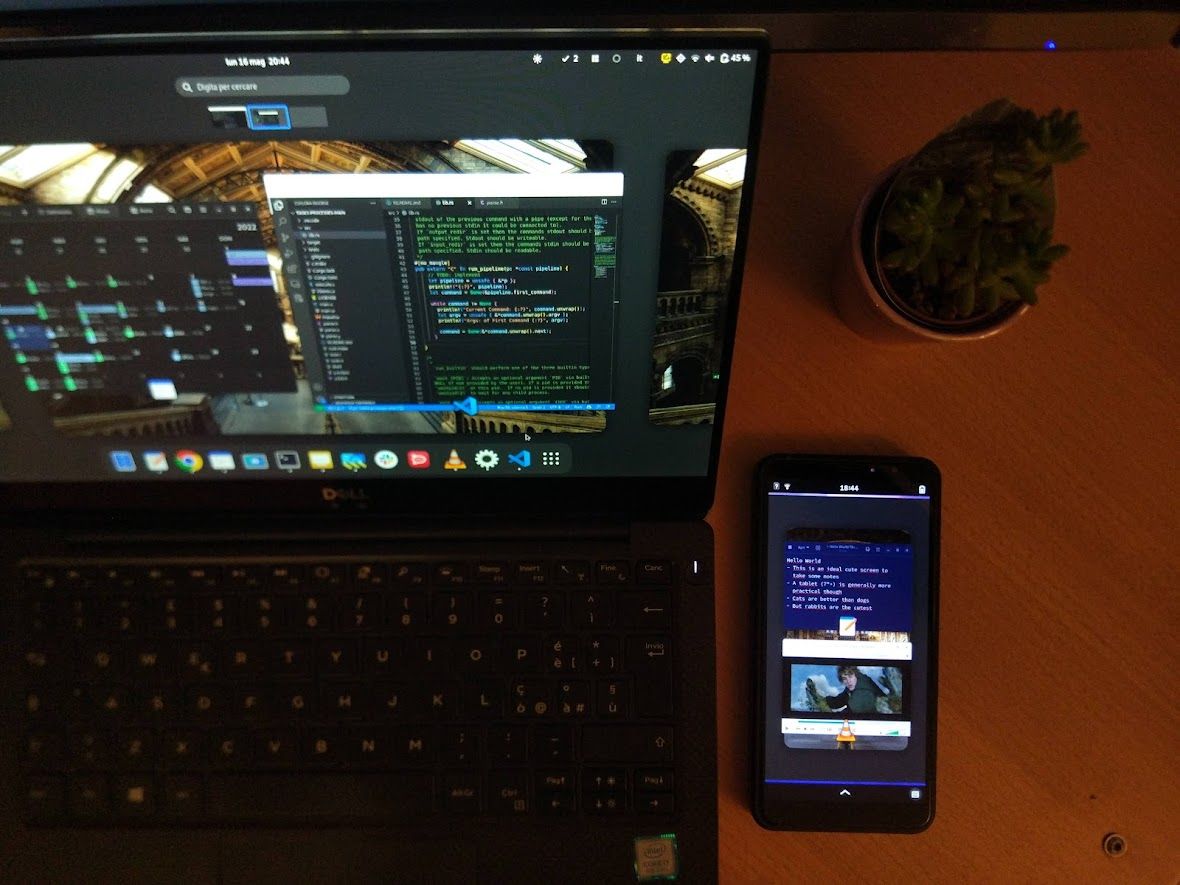

Using a Linux phone as a secondary monitor

As a software developer on the go, one of the very first use cases that I started investigating after installing Linux on my first tablet was that of using a portable device as a secondary display for another Linux machine. Ideally, this would happen wirelessly (or wired, if that involved lower power consumption), with unnoticeable delay, and - why not - even including real-time touchscreen input.

The journey, however, took longer than planned. Existing solutions, like VNC, tend to be strangely laggy, and others, like Miracast, are so deeply enclosed in proprietary protocols that they rarely deliver a bug-free experience on all devices. The first solution to this problem, at an even less stable stage than the current one, was my most shared post ever on Twitter, so I decided to write a post on how this was done.

why is everyone liking my hacks

~ me, 2022

To start, let us split this problem into smaller pieces. The components are:

- Virtual display handling: creating a fake video output. On Xorg, some GPU-specific hacks exist (probably Intel-only), whereas on Wayland this depends on the compositor (here, Mutter)

- Video capturing: we assume that the windowing system grants enough permissions to capture the screen

- Video streaming: this, of course, needs to be fast, so real-time compression and decoding of the stream are necessary.

- Network: This one is easy, at least on our side. By removing most known sources of network overhead (e.g. not relying on central Wi-Fi APs, avoiding TCP, ...) and transmitting bare-bones UDP packets from point to point, we achieve significant gains in performance. In fact, even dropping wireless entirely and using Ethernet over USB (which e.g. postmarketOS supports) could further reduce latency.

- Display / decoding on the receiving device: as we said, fast video decoding is needed, and it is far from a given in the hellish landscape of fragmented ARM SoCs and their many video decoders, some running over proprietary wrappers (e.g. Adreno), some over hacky adaptations of Android userspace (e.g. hybris), some supporting a ridiculously small subset of features in mainline (e.g. old Nvidia Tegras), and some not supported at all. Furthermore, with most ports using Android drivers, this needs to be reproducible on a myriad of kernels, including non-mainline ones!

- (Optional) input device handling: to capture touchscreen events on the receiving screen, transmit them smoothly, and map them as real touch input on the host device. A little spoiler: I have not gotten here yet, and will not any time soon.

Decent reliability, easy reproducibility (even on downstream kernels) and acceptable latency are required.
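To make the "bare-bones UDP" point from the network item concrete, here is a minimal sketch in Python, run entirely over the loopback interface. The function name `udp_roundtrip` and the payloads are made up for illustration; the actual stream in this post is sent by GStreamer, not by code like this.

```python
import socket


def udp_roundtrip(chunks, timeout=2.0):
    """Send each chunk as one UDP datagram over loopback and return what arrived."""
    # Receiver: bind to port 0 so the OS picks any free port.
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))
    receiver.settimeout(timeout)
    port = receiver.getsockname()[1]

    # Sender: no connection setup and no acknowledgements,
    # every sendto() call is one self-contained datagram.
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        for chunk in chunks:
            sender.sendto(chunk, ("127.0.0.1", port))
        return [receiver.recvfrom(65535)[0] for _ in chunks]
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    print(udp_roundtrip([b"frame-0", b"frame-1", b"frame-2"]))
```

The trade-off is the usual one: nothing is retransmitted, so a lost datagram shows up as a video glitch rather than as added delay, which is generally the better failure mode for a live display.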

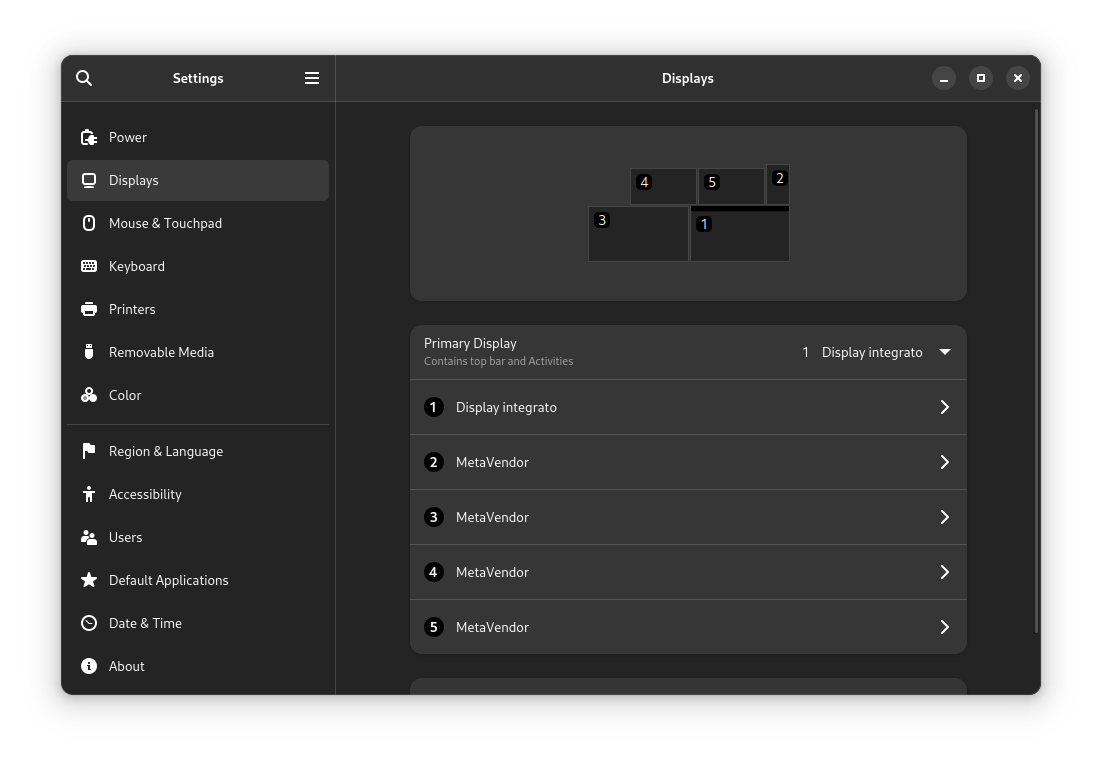

Off-the-shelf solutions for streaming an existing desktop (but not a virtual one) are endless: they include Deskreen (which communicates over WebRTC), an almost hidden feature in VLC that enables streaming and transcoding of the desktop, plain VNC, and a myriad of open-source (X11, often semi-abandoned) and proprietary streaming apps. However, not all devices have a hardware HDMI port, and bringing a fake hardware adapter to trick the GPU into enabling a second video sink is chaotic to say the least. The alternative, at this point, is to use a Mutter-based desktop, or any other window manager with some sort of built-in interface for creating virtual screens in software.

After a few failed attempts, with latency in the range of seconds, I am starting to see some light. By getting rid of as many layers as possible, minimizing the overhead, and just trying the simplest hacks for raw streaming, performance is getting surprisingly acceptable: at 5 Mbps of bandwidth, this means over 10 FPS, and latency is probably around 200 ms over good connections.
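As a rough back-of-the-envelope check of those numbers, the sketch below computes the average byte budget per encoded frame at 5 Mbps and 10 FPS. The comparison against an uncompressed frame assumes a 1280x720 output at 3 bytes per pixel, which is my assumption for illustration and not a figure from the experiment.

```python
def frame_budget_bytes(bitrate_kbps, fps):
    """Average number of bytes available per encoded frame."""
    return bitrate_kbps * 1000 / 8 / fps


def raw_frame_bytes(width, height, bytes_per_pixel=3):
    """Size of one uncompressed frame."""
    return width * height * bytes_per_pixel


if __name__ == "__main__":
    budget = frame_budget_bytes(5000, 10)   # 5 Mbps, 10 FPS
    raw = raw_frame_bytes(1280, 720)        # assumed resolution, not from the post
    print(f"{budget / 1000:.1f} kB per encoded frame")
    print(f"{raw / 1000:.1f} kB per raw frame")
    print(f"compression needed: about {raw / budget:.1f}x")
```

At higher frame rates the per-frame budget shrinks proportionally, which is why real-time compression cannot be skipped on a wireless link.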

Prerequisites

- This solution requires the Mutter window manager (40+), so GNOME, Elementary, Budgie or other Mutter-based desktops. Its clean D-Bus API is also a unified solution for virtual monitors between Wayland and Xorg. Theoretically, virtual monitors have also been supported by other window managers like KDE and Sway for a while, so adapting this solution should be relatively easy.

- You will also need a PipeWire-enabled stack, so a very modern distribution such as Fedora 35+ or Arch Linux. In theory, you can use PipeWire for video even on a system that still uses the now-legacy PulseAudio for handling sound.

- Wi-Fi, working USB Ethernet, or any other network with acceptable latency

- Finally, hardware video acceleration on the receiver side is a must. Plain CPU video decoding will either lag behind the stream, or consume a lot of power in the process, or both.

Current st(h)ack

I created a Python script based on an older Mutter API demo script. This was modified to have bare-bones streaming, and requires the following D-Bus and command line interfaces:

- Mutter: to create a virtual display

- PipeWire: to manage the stream

- GStreamer: to compress/send/receive video between the two devices.

In particular, two commands are used. On the sender:

- pipewiresrc path=%u ! (this starts the stream from PipeWire)

- %s videoconvert ! x264enc tune=zerolatency bitrate=5000 speed-preset=superfast ! (...and transcodes to x264 video stream)

- rtph264pay ! (...encapsulates the stream)

- udpsink host=... port=... (...and transmits it to the host via "raw" UDP)

On the receiver:

- DISPLAY=:0 (is optional, but allows invoking the script e.g. from headless sessions such as SSH. The display identifier may clearly differ: :1, :2, etc.)

- gst-launch-1.0 udpsrc port=... caps="application/x-rtp, media=(string)video, clock-rate=(int)90000, encoding-name=(string)H264" ! (this receives the UDP stream)

- rtph264depay ! avdec_h264 ! videoconvert ! (...decodes it,)

- autovideosink sync=false (...and starts an auto-detected compatible video sink)

Remember to install gstreamer1.0-tools or the equivalent package on the receiver.
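For reference, here is a hedged Python sketch of how the two pipeline strings above could be assembled. The helper names (`sender_pipeline`, `receiver_command`) and the example node id, address and port are hypothetical and not taken from the actual script; the `%s` placeholder of the original sender pipeline is left out, since this post does not say what it expands to.

```python
import shlex

# RTP caps copied from the receiver command above.
RTP_CAPS = (
    "application/x-rtp, media=(string)video, "
    "clock-rate=(int)90000, encoding-name=(string)H264"
)


def sender_pipeline(node_id, host, port, bitrate_kbps=5000):
    """GStreamer pipeline description for the sending machine."""
    elements = [
        f"pipewiresrc path={node_id}",   # read the PipeWire stream
        "videoconvert",
        # x264enc expects its bitrate in kbit/s, so 5000 is about 5 Mbps
        f"x264enc tune=zerolatency bitrate={bitrate_kbps} speed-preset=superfast",
        "rtph264pay",                    # wrap H.264 into RTP packets
        f"udpsink host={host} port={port}",
    ]
    return " ! ".join(elements)


def receiver_command(port, display=":0", decoder="avdec_h264"):
    """Shell command for the receiving device."""
    elements = [
        f"udpsrc port={port} caps={shlex.quote(RTP_CAPS)}",
        "rtph264depay",
        decoder,
        "videoconvert",
        "autovideosink sync=false",
    ]
    prefix = f"DISPLAY={display} " if display else ""
    return prefix + "gst-launch-1.0 " + " ! ".join(elements)


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(sender_pipeline(42, "192.168.1.20", 5000))
    print(receiver_command(5000))
```

Keeping the decoder as a parameter makes it easy to try a hardware decoder element on the receiver without touching the rest of the command.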

How to test (pre-alpha)

Again, this is just the earliest working experiment. However, if you are not worried about this script probably not working in your case, here is the testing procedure:

- Download the script linked at the end of this post,

- Establish a network connection, point to point (aka: "computer to phone") if possible to minimize the switching and routing delay of far away access points.

  This can be done either via USB Ethernet, or more simply via Wi-Fi (e.g. with one device in AP/hotspot mode, and the other connected to it). Using "standard" Wi-Fi with an external router is also possible, but results in unreliable latency of around 0.5-1.5s.

- Get the (local) destination IP address of the receiver via ifconfig, or an equivalent, and run the command on the sender: python3 gnome-virtual-cast-and-stream.py -v {width} {height} {destination IP}

- Copy the "receiving command" from the console, and execute it on the receiving device, e.g. through SSH. You may need to adapt or remove the DISPLAY=:0 variable.

Results

This solution, which should minimize overhead to reasonable levels, depends highly on the decoding capabilities of the receiving devices:

- On CutiePi Tablet (Raspberry Pi CM4), using open-source drivers, this tool worked perfectly

- On a mainline Linux phone (Purism Librem 5), the virtual monitor experience was equally solid

- On an Intel i5 tablet, this solution also worked flawlessly

- However, on some older Qualcomm devices (tested on Snapdragon 820) this was seemingly much slower and glitchy. This is possibly due to the mainline Linux drivers for the Qualcomm Venus (V4L2) video decoder being immature, although replacing avdec_h264 with v4l2h264dec in the command above should enable Venus-based decoding (thanks Yassine for the suggestion).

- On a Linux device with no video decoding or hardware acceleration, this will be inevitably slow
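The decoder swap suggested for Qualcomm devices can be sketched as a small string helper. `swap_decoder` is a hypothetical name, and port 5000 in the example is a placeholder; whether v4l2h264dec actually performs well depends on the device's drivers, as noted above.

```python
def swap_decoder(command, old="avdec_h264", new="v4l2h264dec"):
    """Replace one decoder element in a gst-launch command line."""
    # Pipeline elements are separated by "!", so compare whole elements
    # instead of doing a blind substring replacement.
    parts = [part.strip() for part in command.split("!")]
    if old not in parts:
        raise ValueError(f"{old} is not an element of this pipeline")
    return " ! ".join(new if part == old else part for part in parts)


if __name__ == "__main__":
    receiver = (
        "gst-launch-1.0 udpsrc port=5000 "
        'caps="application/x-rtp, media=(string)video, '
        'clock-rate=(int)90000, encoding-name=(string)H264" ! '
        "rtph264depay ! avdec_h264 ! videoconvert ! autovideosink sync=false"
    )
    print(swap_decoder(receiver))
```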

Found a way to have almost zero-latency, wireless video streaming on a virtual secondary screen, i.e.: using a mainline Linux tablet (with working GPU) as a portable second screen for your Linux desktop.

— TuxPhones (@tuxphones) January 30, 2022

The amazing @cutiepi_io (review next week!) is seen here for this purpose pic.twitter.com/HpfYc7NE5e

Generalizing

Needless to say, the priority would be to find ways to generalize this procedure to a wider share of Linux devices:

- KDE: This should already be possible at the moment. PipeWire and screencasting APIs are indeed present, and KWin should support creation of virtual outputs to some extent

- Sway: there is an issue for this, and it may become possible in the future

- To X11-based desktops: This is much simpler, although messier, than on Wayland. Creating virtual screens is supported, for example, by Intel GPU drivers, and screen capturing on this virtual sink is possible also without Mutter. However, in my experience, Xorg streaming is considerably glitchier, maybe due to the different buffering mechanism;

- To (possibly?) even lower overhead: using network via USB, rather than Wi-Fi as in the demo seen above, and removing x264 or replacing it with a faster codec, reducing the latency of encoding and decoding the stream at the price of possibly higher bandwidth and power consumption;

- To Linux devices without GPU drivers and/or video acceleration (e.g. many Android phones with basic Linux support): replacing x264 with a raw stream might work better for CPU rendering, but it will remain a relatively painful and power-consuming experience;

- To many virtual screens: This already works, simply by launching several instances of the script on the transmitter, each pointing to a different target IP and port, provided that the wireless bandwidth is sufficient and the higher power consumption is not a concern.
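The multi-screen case can be sketched as a small launcher that starts one instance of the script per receiver. The helper names and the IP addresses are hypothetical, and only the arguments shown in the testing procedure are used; how the script selects a distinct port per instance is not covered in this post, so that part is left out.

```python
import subprocess

SCRIPT = "gnome-virtual-cast-and-stream.py"


def build_commands(targets, width, height):
    """One argument list per receiver, mirroring the test command above."""
    return [
        ["python3", SCRIPT, "-v", str(width), str(height), ip]
        for ip in targets
    ]


def launch_all(commands):
    """Start every instance without waiting for any of them to exit."""
    return [subprocess.Popen(command) for command in commands]


if __name__ == "__main__":
    # Hypothetical receivers; this only prints the commands.
    # Call launch_all(...) on the result to actually start them.
    for command in build_commands(["192.168.1.20", "192.168.1.21"], 1280, 720):
        print(" ".join(command))
```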

Conclusion

The road to a universal, usable solution is still long, but this is a first step towards a working solution for the majority of devices and distributions. For the moment, a 100-line Python proof of concept is linked below.
